Introduction of time series forecasting with sktime

Code friendly introduction/tutorial to get started with time series forecasting.

! pip install sktime --quiet

! pip install pmdarima --quiet

import numpy as np

import matplotlib.pyplot as plt

import sktime

from sktime.datasets import load_macroeconomic, load_shampoo_sales, load_airline

Y = load_macroeconomic()

print(type(Y), type(Y.index))

Y.tail()

from sktime.utils.plotting import plot_series

realgdp = Y['realgdp']

infl = Y['infl']

fig, ax = plt.subplots(nrows=2, figsize=(15,6))

plot_series(realgdp, ax=ax[0])

plot_series(infl, ax=ax[1])

import pandas as pd

s = pd.Series([0, 1, 2, 3, 4, np.nan, 6])

ax = s.reset_index().plot.scatter(x='index', y=0)

ax.set_xlim([0, 7])

ax.set_ylim([0, 7])

ax.fill_between([4,6], [7,7], alpha=0.2, color='orange');

s_interp = s.interpolate(method='linear')

ax = s_interp.reset_index().plot.scatter(x='index', y=0)

s_interp[[5]].plot(color='orange', marker='o', markersize=12, ax=ax)

ax.set_xlim([0, 7])

ax.set_ylim([0, 7])

ax.fill_between([4,6], [7,7], alpha=0.2, color='orange')

s = pd.Series([0, 1, 2, 3, 4, np.nan])

ax = s.reset_index().plot.scatter(x='index', y=0)

ax.set_xlim([0, 7])

ax.set_ylim([0, 7])

ax.fill_between([4,6], [7,7], alpha=0.2, color='orange');

s_interp = s.interpolate(method='linear', limit_direction='forward')

ax = s_interp.reset_index().plot.scatter(x='index', y=0)

s_interp[[5]].plot(color='orange', marker='o', markersize=12, ax=ax)

ax.set_xlim([0, 7])

ax.set_ylim([0, 7])

ax.fill_between([4,6], [7,7], alpha=0.2, color='orange');

s_interp = s.interpolate(method='spline', order=1, limit_direction='forward')

ax = s_interp.reset_index().plot.scatter(x='index', y=0)

s_interp[[5]].plot(color='orange', marker='o', markersize=12, ax=ax)

ax.set_xlim([0, 7])

ax.set_ylim([0, 7])

ax.fill_between([4,6], [7,7], alpha=0.2, color='orange');

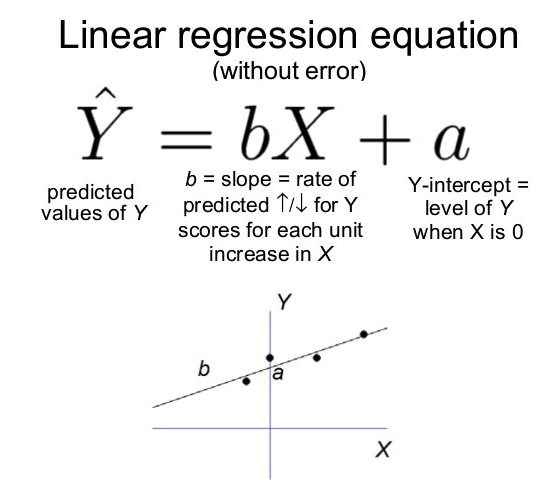

(Image source: A Friendly Introduction to Machine Learning)

Regression for time series involves finding/designing a good feature set ($X$) that predicts our target values ($Y$). This process is known in machine learning as feature engineering.

# ts -> FeatureExtraction -> X (features)

X = FeatureExtraction.fit_transform(ts) # backwards information or not!Lots of algorithms are widely used in the context of time series, including Linear Regression and tree-based ensembles such as Random Forest or Gradient Boosting.

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import train_test_split

from sklearn.pipeline import make_pipeline

from sktime.forecasting.model_selection import temporal_train_test_split

# X, y

X_train, X_test, y_train, y_test = train_test_split(X, y)

regressor = make_pipeline(

# FeatureExtraction

RandomForestRegressor(),

)

regressor.fit(X_train, y_train)

regressor.score(X_test, y_test)Convert index to pd.DatetimeIndex:

y = y.to_timestamp(freq="M")

y_train, y_test = temporal_train_test_split(y, test_size=36)Transforming a regressor into forecaster:

from sktime.forecasting.base import ForecastingHorizon

from sktime.forecasting.compose import make_reduction

from sktime.forecasting.model_selection import temporal_train_test_split

forecaster = make_reduction(

regressor, scitype="time-series-regressor", window_length=12

)

forecaster.fit(y_train)

fh = ForecastingHorizon(y_test.index)

y_pred = forecaster.predict(fh)from sktime.utils.plotting import plot_correlations

plot_correlations(

realgdp, lags=36, alpha=0.05, pacf_method="ywadjusted",

acf_title="Autocorrelation", pacf_title="Partial Autocorrelation",

);

plot_correlations(

infl, lags=36, alpha=0.05, pacf_method="ywadjusted",

acf_title="Autocorrelation", pacf_title="Partial Autocorrelation",

);

ARIMA as a strong baseline

ARIMA is an algorithm to find Autoregressive Integrated Moving-Average components and build a time series forecasting model. On its basic form, ARIMA has three main parameters to tune. How to find appropriate parameters for ARIMA (p, d, q)? The Box-Jenkins method was well-known as an approach to take the parameters from analysis on autocorrelation and stationarity.

- p -> Autoregressive components (a.k.a lags)

- d -> Integrative component (diff)

- q -> Moving average components (trend lags)

But there are lots of other subtypes of ARIMA models, such as SARIMA that takes into account seasonality and many others.

If you find ARIMA an interesting algorithm and want know more about it, there are many great videos online. Here we will use the (famously on R) AutoARIMA method, restricting parameters to avoid overfitting.

from sktime.forecasting.arima import AutoARIMA

from sktime.forecasting.naive import NaiveForecaster

y = load_airline()

# ARIMA

forecaster = AutoARIMA(sp=12,

suppress_warnings=True)

forecaster.fit(y)

print(f"ARIMA info:\n{forecaster.get_fitted_params()}")

y_pred = forecaster.predict(fh=np.arange(1, 13)) # forecast the next 12 months at once

# vs NaiveForecaster

naive_forecaster = NaiveForecaster(strategy='last', sp=12)

naive_forecaster.fit(y)

y_pred_naive = naive_forecaster.predict(fh=np.arange(1, 13))

fig, ax = plt.subplots(nrows=2, figsize=(15,6))

ax[0].grid()

plot_series(y, y_pred, ax=ax[0])

ax[1].grid()

plot_series(y, y_pred_naive, ax=ax[1]);

Other decision-making baselines

| Interpretation | Forecaster sktime |

|---|---|

| "Tomorrow will be just like today" | NaiveForecaster(strategy='last') |

| "Tomorrow will be close to the overall mean" | NaiveForecaster(strategy='mean') |

| "Tomorrow will be the mean of the last three days" | NaiveForecaster(strategy='mean', window_length=3) |

| "Next month will be as it was the same month of last year" | NaiveForecaster(strategy='last', sp=12) |

from sktime.forecasting.model_selection import temporal_train_test_split

from sktime.forecasting.base import ForecastingHorizon

y = load_airline()

y_train, y_test = temporal_train_test_split(y, test_size=36)

# plotting for illustration

plot_series(y_train, y_test, labels=["y_train", "y_test"])

print(f"Train: {y_train.shape[0]} points \nTest: {y_test.shape[0]} points")

fh = ForecastingHorizon(y_test.index, is_relative=False)

from sktime.forecasting.compose import AutoEnsembleForecaster

from sktime.forecasting.naive import NaiveForecaster

from sktime.forecasting.trend import PolynomialTrendForecaster, STLForecaster

from sktime.forecasting.exp_smoothing import ExponentialSmoothing

from sklearn.metrics import mean_squared_error, r2_score

forecasters = [

# ("trend", STLForecaster(sp=12)),

# ("poly", PolynomialTrendForecaster(degree=1)),

("expm", ExponentialSmoothing(trend="add")),

# ("naive", NaiveForecaster()),

]

forecaster = AutoEnsembleForecaster(forecasters=forecasters)

forecaster.fit(y=y_train, fh=fh)

y_pred = forecaster.predict()

# Compute performance metrics

metrics = {

'mean_squared_error': mean_squared_error(y_test, y_pred),

'r2_score': r2_score(y_test, y_pred)

}

print(metrics)

# plotting

fig, ax = plt.subplots(figsize=(15,6))

plot_series(y_train, y_test, y_pred, labels=['y', 'y_test', 'y_pred'], ax=ax);

from sktime.forecasting.arima import AutoARIMA

from sktime.performance_metrics.forecasting import mean_absolute_percentage_error

forecaster = AutoARIMA(sp=12, suppress_warnings=True)

forecaster.fit(y_train)

y_pred = forecaster.predict(fh = fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

# computing the forecast performance

metrics = {

'mean_squared_error': mean_squared_error(y_test, y_pred),

'r2_score': r2_score(y_test, y_pred)

}

print(metrics)

forecasters = [

("trend", STLForecaster(12)),

# ("poly", PolynomialTrendForecaster(degree=1)),

("expm", ExponentialSmoothing(trend="add")),

# ("naive", NaiveForecaster(strategy="last", sp=12)),

]

forecaster = AutoEnsembleForecaster(forecasters=forecasters)

forecaster.fit(y_train)

y_pred = forecaster.predict(fh = fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

# computing the forecast performance

metrics = {

'mean_squared_error': mean_squared_error(y_test, y_pred),

'r2_score': r2_score(y_test, y_pred)

}

print(metrics)

Take aways

- Interpolation, Regression and Forecasting are techniques that use diferent methods to make predictions;

- Our world is chaotic thus your time series forecasting task may be more complex (multivariate, etc);

- Model evaluation is crucial, including baseline analysis;

- Validation may require the support of a domain expert that can interpret the results;

- Applications can build expert systems (agents) that use predictions to act automaticaly (predictive controllers).

Themes for additional discussion

- Model-centric improvements: Machine learning, Deep learning, AutoML;

- Ethics, transparency, reprodutibility and interpretability;

- ...